First written: 22-11-28

Translated: 25-02-06

Uploaded: 25-02-06

Last modified: 25-01-14

My first encounter with Nginx was during my first project. At first, I decided to deploy my service on Heroku, but Heroku gave me trouble when I tried to set up HTTPS. Also, because I was on the free plan, Heroku put my app to sleep whenever it had no visitors. I looked at AWS, which is still very popular, but I was worried by the occasional stories of people being unexpectedly overcharged.

The next service I found was DigitalOcean, which was less well known and had little information available. I liked the cheap base fee and the fact that it only provides a plain Linux server. That seemed like an advantage because I could build my service the way I wanted without limits from the provider, but it also meant I had to learn many things myself. I chose DigitalOcean anyway, and it took me almost a month just to deploy.

Nginx was one of the services I had to set up myself. At that time, after a long stretch of copying and pasting code, I got it working by following a video from my favorite YouTuber without really knowing what I was doing. In hindsight, I had configured my server as a reverse proxy, although back then I was just happy that it was running properly.

I wanted to study Nginx to understand exactly what I had done, but I never got around to it. Some time later, while working as a teaching assistant in a web development education program, I was asked some questions about Nginx. They were simple, basic questions, but I took them as a chance to finally study Nginx. I also wanted to build a mail server or a multi-domain website, so the timing looked right.

This is the first post in my Nginx summary series. Here I introduce the basic directives that developers usually run into when they serve a website with Nginx. The second post will probably cover reverse proxies.

Applications can be divided by where they run and whether they interact with the user. Some apps interact with the user in the foreground, and others support other apps in the background. The latter are called daemons, and Nginx is one of them. Daemon names commonly end with the letter 'd', like "named", "crond", and "httpd", but Nginx's name doesn't.

On Ubuntu, the main configuration file of Nginx is nginx.conf in the /etc/nginx directory, which is where it ends up if you install Nginx with apt-get. If you build Nginx from source with the default prefix, the file is at /usr/local/nginx/conf/nginx.conf instead. This file includes many additional configuration files, like below.

include /etc/nginx/modules-enabled/*.conf;

include /etc/nginx/sites-enabled/*;

Wildcards are very common in these include lines. If the path after include contains a wildcard, there is no error even when no file matches. If the path has no wildcard, an error occurs when the file doesn't exist at that exact path.
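To make the rule concrete, here is a small Python sketch that mimics it with the standard glob module. It is only an approximation of Nginx's behaviour, assuming (as Nginx does) that `*`, `?` and `[` mark a path as a mask; the helper name resolve_include is hypothetical.

```python
import glob
import os

WILDCARD_CHARS = "*?["


def resolve_include(path: str) -> list[str]:
    """Approximate how an include path is resolved (hypothetical helper).

    A path containing a wildcard is a mask, so zero matches is not an error.
    A path without a wildcard must point to an existing file.
    """
    if any(ch in path for ch in WILDCARD_CHARS):
        return sorted(glob.glob(path))  # sorted only to make output deterministic
    if not os.path.exists(path):
        raise FileNotFoundError(f'open() "{path}" failed')
    return [path]
```

So `include /etc/nginx/sites-enabled/*;` is harmless on a fresh install with an empty directory, while a typo in a plain file path stops Nginx from starting.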

Syntax: user user [group];

Default: user nobody nobody;

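Nginx consists of a master process, which usually runs as root so it can read the configuration and bind to ports such as 80 and 443, and worker processes, which actually handle requests. The user directive sets the user and group of the worker processes, not of the master process; if the master process is not running as root, the directive is ignored. When the worker user cannot read the files being served, clients get a 403 Forbidden error.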

Because the worker processes are the ones handling requests, running them as root (user root; or user root root;) is risky. It's better to create a new user that has no login shell and switch to it. On Ubuntu, the www-data user already exists, so you can usually just reference it.

$ sudo useradd --system --shell /usr/sbin/nologin www-data

user www-data;

Syntax: worker_processes number | auto;

Default: worker_processes 1;

Sets the number of worker processes. The optimal value depends on the load pattern, the number of hard disks, and most importantly the number of CPU cores. If you're not sure, set it to the number of CPU cores, or simply use auto (probably the best choice), which detects the number of cores automatically.

worker_processes auto;

Syntax: pid file;

Default: pid logs/nginx.pid;

Sets the path of the pid file of the Nginx daemon. It's usually better not to change it, because the path is determined at compile time and may be used by Nginx's init script.

Syntax: worker_connections number;

Default: worker_connections 512;

Sets the maximum number of connections that one worker process can handle simultaneously. If worker_processes is set to 4 and worker_connections to 1024, up to 4096 connections can be handled simultaneously. These include not only connections with clients but also connections to proxied servers, etc. The real limit also cannot exceed the maximum number of open files per process, which can be raised with worker_rlimit_nofile.

worker_connections 1024;
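The arithmetic above, as a tiny Python sketch. Both function names are hypothetical; `os.cpu_count()` only approximates what `worker_processes auto` detects, and halving the total for a reverse proxy is a common rule of thumb (each client connection also needs one connection to the backend), not an Nginx formula.

```python
import os


def worker_capacity(worker_processes="auto", worker_connections=1024):
    """Upper bound on simultaneous connections: processes x connections each."""
    if worker_processes == "auto":
        # Approximation of 'auto': the number of logical CPUs Python sees.
        worker_processes = os.cpu_count() or 1
    return worker_processes * worker_connections


def proxied_clients(total_connections):
    """Rule of thumb (assumption): when proxying, every client also uses one
    upstream connection, so only about half the slots serve clients."""
    return total_connections // 2
```

With the values from the text, `worker_capacity(4, 1024)` gives 4096, of which roughly 2048 could be clients when every request is proxied.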

Syntax: multi_accept on | off;

Default: multi_accept off;

This directive decides whether a worker process accepts only one new connection at a time (off) or all pending new connections at once (on).

multi_accept on;

This is the part where the developer sets HTTP response headers with add_header, such as X-Frame-Options, X-Content-Type-Options, X-XSS-Protection, and Strict-Transport-Security. I think these headers are better covered in an article about security than in one about Nginx.

add_header X-Frame-Options SAMEORIGIN;

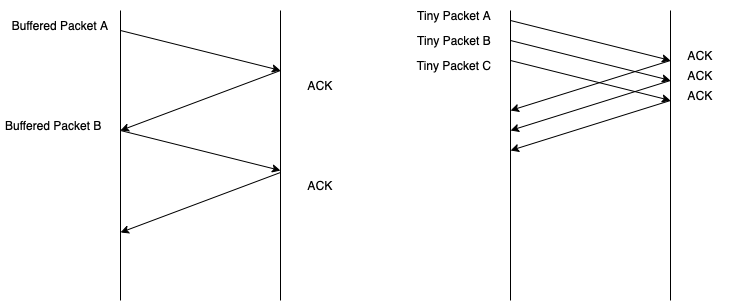

The Nagle algorithm was designed to reduce network load by reducing the number of packets. When Nagle is off, as on the right-hand side of the picture above, the server sends packets without waiting for an ACK from the client, which reduces the client's waiting time. The downside is possible network load from many small packets sent frequently: the TCP and IPv4 headers alone take 40 bytes (20 bytes each), so a 1-byte payload costs 41 bytes on the wire.

When Nagle is on, the server accumulates data in its buffer until it receives an ACK from the client, and sends the data once it reaches the MSS (Maximum Segment Size) or an ACK arrives. This reduces network load but also reduces speed, so developers should use it only when it's needed.

The Nagle algorithm in pseudocode:

    #define MSS "maximum segment size"
    if there is new data to send
        if the window size >= MSS and available data is >= MSS
            send complete MSS segment now
        else
            if there is unconfirmed data still in the pipe
                enqueue data in the buffer until an acknowledge is received
            else
                send data immediately
            end if
        end if
    end if
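The pseudocode can be turned into a small Python simulation. This is a simplified model, not the kernel's implementation: the receive window is a fixed number, one ACK acknowledges everything outstanding, and the class name NagleSender is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class NagleSender:
    """Toy model of the Nagle decision rule from the pseudocode above."""
    mss: int = 1460        # maximum segment size in bytes
    window: int = 65535    # receiver window, kept constant for simplicity
    unacked: int = 0       # bytes sent but not yet acknowledged
    buffer: bytearray = field(default_factory=bytearray)
    sent: list = field(default_factory=list)  # sizes of segments put on the wire

    def write(self, data: bytes) -> None:
        """The application hands new data to TCP."""
        self.buffer += data
        self._try_send()

    def ack(self) -> None:
        """The peer acknowledges all outstanding data."""
        self.unacked = 0
        self._try_send()

    def _try_send(self) -> None:
        while self.buffer:
            if self.window >= self.mss and len(self.buffer) >= self.mss:
                self._emit(self.mss)          # full segment: send now
            elif self.unacked:
                return                        # small data + data in flight: wait for ACK
            else:
                self._emit(len(self.buffer))  # nothing in flight: send immediately

    def _emit(self, size: int) -> None:
        self.sent.append(size)
        self.unacked += size
        del self.buffer[:size]
```

Writing three 1-byte chunks sends only the first one immediately; the other two wait in the buffer and leave together as a single 2-byte segment once the ACK arrives. Large writes still go out as full MSS-sized segments right away.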

Decides whether to use the sendfile() system call to send files. Because sendfile() copies data from the file to the socket inside the kernel, instead of passing it through user space with read() and write(), you may see an improvement in speed.

sendfile on;
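Nginx calls sendfile() from C, but Python's `os.sendfile()` wraps the same system call, so it can illustrate the idea. A sketch assuming a Unix-like OS; send_file_zero_copy is a hypothetical name, and Python's own `socket.sendfile()` performs a similar loop for you.

```python
import os
import socket


def send_file_zero_copy(sock: socket.socket, path: str) -> int:
    """Send the whole file at `path` over `sock` with sendfile(); return bytes sent."""
    with open(path, "rb") as f:
        size = os.fstat(f.fileno()).st_size
        offset = 0
        while offset < size:
            # The kernel moves data from the file to the socket directly,
            # without copying it into a user-space buffer via read()/write().
            sent = os.sendfile(sock.fileno(), f.fileno(), offset, size - offset)
            if sent == 0:  # end of file reached earlier than expected
                break
            offset += sent
    return offset
```

The loop matters because sendfile() may send fewer bytes than requested, so the offset has to be advanced until the whole file is out.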

Decides whether to use TCP_CORK, a Linux socket option (on FreeBSD the equivalent is TCP_NOPUSH). Since Linux 2.5.71, it can be combined with TCP_NODELAY. This option only takes effect when sendfile is used.

With TCP_CORK, the kernel holds back partial segments: data is sent when the queued data reaches the MSS, when the cork is removed, or when a 200 ms ceiling expires. This looks very similar to the Nagle algorithm.

The only significant difference from the Nagle algorithm is whether ACKs play a role. The Nagle algorithm sends buffered data whenever an ACK arrives, whereas TCP_CORK ignores ACKs and, if the data doesn't reach the MSS, sends it only after the timeout expires (or the cork is removed).

tcp_nopush on;

tcp_nodelay enables the TCP_NODELAY socket option, which disables the Nagle algorithm. It is on by default.

tcp_nodelay on;

While researching optimization, related concepts such as silly window syndrome, window size, writev(), and the three-way handshake came up, but I stopped there because I think the directives and concepts above are enough for web server developers.

But one thing is worth mentioning: using sendfile, tcp_nopush, and tcp_nodelay together is beneficial for optimization. First, tcp_nopush together with sendfile makes sure packets are full before they are sent to the client, which reduces network overhead and speeds up file transfer. Then, when only the last packet is left, Nginx switches from tcp_nopush to tcp_nodelay to force the socket to send it, which can save up to 200 ms per file.
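The sequence above can be sketched with Python's socket options. This is an illustration of the idea, not Nginx's code: it only runs on Linux (`socket.TCP_CORK` is Linux-only), it uses `sendall()` where Nginx would use sendfile(), and send_corked is a hypothetical helper.

```python
import socket


def send_corked(sock: socket.socket, header: bytes, body: bytes) -> None:
    """Send header + body in full-sized packets, then flush the tail at once."""
    # Like tcp_nopush: hold back partial segments so header and body share packets.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_CORK, 1)
    sock.sendall(header)
    sock.sendall(body)  # Nginx would hand the file to sendfile() here
    # Removing the cork flushes the remaining partial segment immediately
    # instead of waiting for the 200 ms ceiling.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_CORK, 0)
    # Like tcp_nodelay: later small writes are not delayed by Nagle.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
```

Corking while the header and body are written is what keeps the response header from going out in its own tiny packet.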

When building a website, you will find that many files, such as index.html, style.css, favicon.ico, and banner.png, must be sent from the server just to display one small page (to see this, open the developer tools and refresh the page). Such cases are becoming more common as the web grows and user needs diversify. In this situation, if a new TCP connection had to be established with a three-way handshake (exchanging SYN and ACK) for every file, the following would happen.

Client : "Hello, I'm Client. You hear me?"

Server : "Hi, I'm Server. I hear you. Are you ready to receive data?"

Client : "Yes, I'm ready. Please send me index.html."

Server : "Here is index.html."

Client : "Hello, I'm Client. You hear me?"

Server : "Hi, I'm Server. I hear you. Are you ready to receive data?"

Client : "Yes, I'm ready. Please send me style.css."

Server : "Here is style.css."

Client : "Hello, I'm Client. You hear me?"

...

Persistent connections were introduced to reduce the number of these greetings.

Client : "Hello, I'm Client. You hear me?"

Server : "Hi, I'm Server. I hear you. Are you ready to receive data?"

Client : "Yes, I'm ready. Please send me index.html."

Server : "Here is index.html."

Client : "How about style.css?"

Server : "Here is style.css."

Client : "How about favicon.ico?"

...

Using persistent connections appropriately reduces network cost, load, and latency.
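The second dialogue can be reproduced locally with Python's standard library. In this sketch (RecordingHandler and fetch_all are hypothetical names), an HTTP/1.1 server records the client address of every request. Because `http.client` reuses one keep-alive connection, all requests arrive from the same address and port, which means a single handshake served them all.

```python
import http.client
import http.server
import threading


class RecordingHandler(http.server.BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # HTTP/1.1 keeps connections open by default
    peers = []                     # (ip, port) of the client for each request

    def do_GET(self):
        RecordingHandler.peers.append(self.client_address)
        body = f"here is {self.path}".encode()
        self.send_response(200)
        # Content-Length tells the client where the response ends,
        # which is required for reusing the connection.
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def fetch_all(paths):
    """Fetch several paths over a single keep-alive connection."""
    RecordingHandler.peers.clear()
    server = http.server.HTTPServer(("127.0.0.1", 0), RecordingHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    conn = http.client.HTTPConnection("127.0.0.1", server.server_address[1])
    bodies = []
    for path in paths:
        conn.request("GET", path)
        bodies.append(conn.getresponse().read())  # read fully before reusing
    conn.close()
    server.shutdown()
    server.server_close()
    return bodies, list(RecordingHandler.peers)
```

Calling `fetch_all(["/index.html", "/style.css", "/favicon.ico"])` returns three bodies, and every recorded peer is the same (ip, port) pair.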

Syntax: keepalive_timeout timeout [header_timeout];

Default: keepalive_timeout 75s;

HTTP keep-alive enables persistent connections. In HTTP/1.0 it is an opt-in extension using the Connection: keep-alive header, while in HTTP/1.1 connections are persistent by default. The keepalive_timeout directive sets how long an idle keep-alive connection stays open on the server side, and the optional second parameter sets the value of the Keep-Alive: timeout=... response header. An excessively long timeout means the server may hold on to connections it no longer needs.

keepalive_timeout 75;

Syntax: types_hash_bucket_size size;

Default: types_hash_bucket_size 64;

Syntax: server_names_hash_bucket_size size;

Default: server_names_hash_bucket_size 32|64|128;

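Nginx uses hash tables to quickly process static sets of data such as server names, map directive values, MIME types, and names of request header strings. The default of server_names_hash_bucket_size depends on the size of the processor's cache line. A larger value is recommended for server_names_hash_bucket_size when you have long domain names or many virtual host names; if a name doesn't fit, Nginx refuses to start and tells you to increase it. For types_hash_bucket_size, the default value is usually fine.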

types_hash_bucket_size 64;

server_names_hash_bucket_size 128;

Syntax: server_tokens on | off | build | string;

Default: server_tokens on;

Enables or disables showing the Nginx version on error pages and in the Server response header field. Setting it to 'off' is recommended for security.

server_tokens off;

Syntax: server_name_in_redirect on | off;

Default: server_name_in_redirect off;

This option matters when Nginx itself issues an absolute redirect, for example when it adds a trailing slash to a directory request or when a rewrite rule sends the client to a cleaner URL. https://example.com/article-Best-Way-To-Make-Milk-By-Back-Howard is better than https://example.com/article?id=10&author=Back+Howard in terms of UX and SEO, and redirecting to such URLs is a common case. If set to 'on', Nginx builds the redirect URL with the primary server name, the first name in server_name; if set to 'off', it uses the host name from the Host request header.

server_name_in_redirect on;
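A Python sketch of how the host part of an absolute redirect is chosen under each setting. The function and its parameters are hypothetical, the port is ignored for simplicity, and the fallback to the server's IP address when there is no Host header follows the Nginx documentation's description.

```python
from typing import Optional


def redirect_location(path: str, server_names: list[str], host_header: Optional[str],
                      server_ip: str, in_redirect: bool, scheme: str = "https") -> str:
    """Build the Location URL of a redirect issued by Nginx (simplified)."""
    if in_redirect:
        host = server_names[0]  # server_name_in_redirect on: primary server name
    elif host_header:
        host = host_header      # off: the name the client asked for
    else:
        host = server_ip        # no Host header: fall back to the server's IP
    return f"{scheme}://{host}{path}"
```

With server_name example.com www.example.com and a visitor on www.example.com, 'on' redirects to example.com while 'off' keeps the visitor on www.example.com.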

Syntax: ssl_protocols [SSLv2] [SSLv3] [TLSv1] [TLSv1.1] [TLSv1.2] [TLSv1.3];

Default: ssl_protocols TLSv1 TLSv1.1 TLSv1.2;

Enables the specified SSL/TLS protocols for connections.

ssl_protocols TLSv1.2 TLSv1.3;

Syntax: ssl_prefer_server_ciphers on | off;

Default: ssl_prefer_server_ciphers off;

Specify that the server cipher be used in preference to the client cipher when using SSLv3 or TLS protocol.

ssl_prefer_server_ciphers on;

Syntax: ssl_ciphers ciphers;

Default: ssl_ciphers HIGH:!aNULL:!MD5;

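Specifies the enabled ciphers, in a format understood by the OpenSSL library. The default HIGH:!aNULL:!MD5 is a reasonable choice. Older examples such as ALL:!aNULL:!EXPORT56:RC4+RSA:+HIGH:+MEDIUM:+LOW:+SSLv2:+EXP enable weak RC4 and export-grade ciphers and should not be copied.

ssl_ciphers HIGH:!aNULL:!MD5;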
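Python's ssl module is also built on OpenSSL, so it can illustrate what ssl_protocols TLSv1.2 TLSv1.3, the cipher string HIGH:!aNULL:!MD5, and ssl_prefer_server_ciphers on mean. This is a server-side context sketch, not Nginx configuration, and make_tls_context is a hypothetical name. Note that in OpenSSL a cipher string like this only affects TLS 1.2 and older; TLS 1.3 cipher suites are configured separately.

```python
import ssl


def make_tls_context(cipher_string: str = "HIGH:!aNULL:!MD5") -> ssl.SSLContext:
    """Server-side TLS settings similar to the directives above."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Like ssl_protocols TLSv1.2 TLSv1.3: refuse anything older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.maximum_version = ssl.TLSVersion.TLSv1_3
    # Like ssl_ciphers: an OpenSSL cipher list string (raises SSLError if nothing matches).
    ctx.set_ciphers(cipher_string)
    # Like ssl_prefer_server_ciphers on.
    ctx.options |= ssl.OP_CIPHER_SERVER_PREFERENCE
    return ctx
```

Listing `[c["name"] for c in make_tls_context().get_ciphers()]` shows which ciphers the string actually expands to on your OpenSSL build, which is a handy way to check a cipher string before putting it in nginx.conf.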

Syntax: ssl_session_timeout time;

Default: ssl_session_timeout 5m;

Specify the time to allow the client to reuse session parameters.

ssl_session_timeout 10m;

Logging records who connected and when, what errors occurred, and so on. It's best to configure logging for each server block and to use a separate log file path for each server. You can specify the format of access logs and the level of error logs.

Syntax: access_log path [format [buffer=size] [gzip[=level]] [flush=time] [if=condition]]; or access_log off;

Default: access_log logs/access.log combined;

access_log /var/log/nginx/MyServer1/access.log;

Syntax: error_log file [level];

Default: error_log logs/error.log error;

error_log /var/log/nginx/MyServer1/error.log;

Syntax: gzip on | off;

Default: gzip off;

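Enables gzip compression of responses. By default only responses of type text/html are compressed; other MIME types must be listed in gzip_types.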

gzip on;
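Nginx's gzip module produces standard gzip output, so Python's gzip module can show why compressing text responses pays off. The sample HTML is made up and deliberately repetitive, gzip_response is a hypothetical name, and compresslevel=1 is chosen to mirror Nginx's default gzip_comp_level of 1.

```python
import gzip


def gzip_response(body: bytes, level: int = 1) -> bytes:
    """Compress a response body as expected for Content-Encoding: gzip."""
    return gzip.compress(body, compresslevel=level)


# A made-up, highly repetitive HTML fragment; real pages compress less, but still well.
html = b"<li class='item'>Nginx</li>\n" * 500
compressed = gzip_response(html)
print(f"{len(html)} bytes -> {len(compressed)} bytes")
```

The browser reverses this with the same algorithm, so the only cost is CPU time on both ends, which is why already-compressed files such as PNG images gain little from it.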

Syntax: root path;

Default: root html;

Sets the root directory for requests. This directive can also be used inside a location block.

root /usr/share/nginx/html;

Syntax: index file ...;

Default: index index.html;

Specifies the index file names, which are tried in order. This directive can also be used inside a location block.

index index.html index.htm;

Syntax: server_name name ...;

Default: server_name "";

This directive lets one IP address serve multiple domain names. Nginx compares the Host header of the request with the server_name of each server block and passes the request to the matching one.

server_name example.com www.example.com;
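Nginx chooses the server block by checking names in a fixed order: exact names first, then the longest wildcard starting with an asterisk, then the longest wildcard ending with an asterisk, then regular expressions, and finally the default server. The Python sketch below models that order but leaves out regular expressions and the special ".example.com" form; select_server and the sample blocks are hypothetical.

```python
def select_server(host: str, servers: dict[str, list[str]], default: str) -> str:
    """Return the name of the server block that would handle `host` (simplified)."""
    host = host.split(":")[0].lower()  # drop an optional port
    # 1. Exact name.
    for block, names in servers.items():
        if host in names:
            return block
    # 2./3. Longest leading wildcard (*.example.com), then longest trailing one (www.*).
    best = {}
    for block, names in servers.items():
        for name in names:
            if name.startswith("*.") and host.endswith(name[1:]):
                kind = "leading"
            elif name.endswith(".*") and host.startswith(name[:-1]):
                kind = "trailing"
            else:
                continue
            if kind not in best or len(name) > len(best[kind][0]):
                best[kind] = (name, block)
    for kind in ("leading", "trailing"):
        if kind in best:
            return best[kind][1]
    # 4. Regular expressions are omitted in this sketch.
    # 5. Default server.
    return default
```

For example, a.shop.example.com matches both *.example.com and *.shop.example.com, and the longer wildcard wins.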

Syntax: proxy_pass URL;

Default: —

Passes requests matched by the location block to a proxied server. The address can be a domain name or an IP address, with an optional port and URI.

For example, with the configuration below, when a user visits www.example.com/api, the request is passed to the root (/) of the server listening on port 8000. Because proxy_pass here includes a URI (the trailing /), the part of the request URI that matched the location, /api, is replaced with /.

server_name example.com www.example.com;

location /api {

proxy_pass http://127.0.0.1:8000/;

}
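The rule behind this is documented: if proxy_pass contains a URI, the part of the request URI that matched the location prefix is replaced by that URI; if it has no URI, the request URI is passed unchanged. A Python sketch of that rule for prefix locations only (proxied_uri is a hypothetical name) also exposes a classic pitfall: with location /api, a request for /api/users becomes //users.

```python
from typing import Optional


def proxied_uri(request_uri: str, location_prefix: str, proxy_uri: Optional[str]) -> str:
    """URI sent upstream for a prefix location (simplified, no regex locations)."""
    if not request_uri.startswith(location_prefix):
        raise ValueError("request does not match this location")
    if proxy_uri is None:
        # proxy_pass http://127.0.0.1:8000;  -> URI is forwarded unchanged
        return request_uri
    # proxy_pass http://127.0.0.1:8000/;  -> the matched prefix is replaced
    return proxy_uri + request_uri[len(location_prefix):]
```

This is why location /api/ paired with proxy_pass http://127.0.0.1:8000/; is the more common combination: /api/users then becomes /users.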

I haven't used it yet, but in some cases the upstream directive is used. Here is a similar setup using both proxy_pass and upstream.

upstream backend {

server 127.0.0.1:8000;

}

server {

server_name example.com www.example.com;

location / {

proxy_pass http://backend;

}

}

In this case, upstream brings no advantage, because it only forwards requests to the single server at 127.0.0.1:8000, just like pointing proxy_pass at it directly. But the example below shows where upstream really shines.

upstream backend {

ip_hash;

server backend1.example.com weight=3;

server 127.0.0.1:8001;

server 127.0.0.1:8002 down;

server 127.0.0.1:8003 max_fails=3 fail_timeout=30s;

server unix:/tmp/backend3;

keepalive 32;

# sticky is available only in the commercial NGINX Plus, and ip_hash already keeps each client on one server

# sticky cookie srv_id expires=1h domain=.example.com path=/;

}

server {

server_name example.com www.example.com;

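location / {

proxy_pass http://backend;

# keepalive in the upstream block only works with HTTP/1.1 and an empty Connection header

proxy_http_version 1.1;

proxy_set_header Connection "";

}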

}

I haven't used the code above in a real service yet, so don't copy it as-is.

Here, several backend servers run on different ports, and one even uses a Unix domain socket. The ip_hash directive selects the load-balancing method (requests from the same client IP go to the same server), and the weight parameter gives a server a larger share of requests. Temporarily unavailable servers can be marked with the down parameter, and the max_fails and fail_timeout parameters set how connection failures are handled for each server. The keepalive directive keeps up to 32 idle connections to the upstream servers cached in each worker process, and in NGINX Plus the sticky directive can send returning clients to the same server using a cookie.

All these features show why the upstream block is so useful.
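To get a feel for the weight parameter, here is a Python sketch of the smooth weighted round-robin method that Nginx uses for its default round-robin balancing. The example above switches to ip_hash, where weight influences hashing instead, so this sketch only covers the default method; the server names are hypothetical.

```python
def smooth_weighted_round_robin(weights: dict[str, int], n: int) -> list[str]:
    """Pick n servers using the smooth weighted round-robin rule."""
    current = {server: 0 for server in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        # Every server gains its weight...
        for server, weight in weights.items():
            current[server] += weight
        # ...the one with the highest running score wins (first one on ties)...
        best = max(current, key=current.get)
        # ...and pays back the total, so a heavy server doesn't win in long bursts.
        current[best] -= total
        picks.append(best)
    return picks
```

With weights a=5, b=1, c=1, every 7 picks give a five requests and b and c one each, but interleaved as a a b a c a a rather than five a's in a row.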

Syntax: proxy_http_version 1.0 | 1.1;

Default: proxy_http_version 1.0;

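Sets the HTTP protocol version for proxying. Version 1.1 is recommended for keepalive connections to upstream servers and is required for proxying WebSocket connections.

proxy_http_version 1.1;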

Syntax: proxy_set_header field value;

Default: proxy_set_header Host $proxy_host; proxy_set_header Connection close;

Redefines or appends fields in the request header passed to the proxied server.

Syntax: proxy_cache_bypass string ...;

Default: —

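Defines conditions under which the response is not taken from the cache. If at least one of the string parameters is non-empty and not equal to "0", the cache is bypassed.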

proxy_cache_bypass $http_upgrade;
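The bypass condition is simple enough to express directly. In this Python sketch, should_bypass_cache is a hypothetical name, and its arguments stand for the already-evaluated variables, such as $http_upgrade, which is empty for normal requests and "websocket" for WebSocket handshakes.

```python
def should_bypass_cache(*values: str) -> bool:
    """True if any value is non-empty and not "0", as proxy_cache_bypass checks."""
    return any(value not in ("", "0") for value in values)
```

So proxy_cache_bypass $http_upgrade; leaves ordinary requests cacheable while making sure WebSocket upgrade requests always reach the backend.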

$ sudo certbot --nginx

The command above obtains an SSL/TLS certificate from Let's Encrypt and automatically writes the SSL-related directives into the Nginx configuration file (listen 443, ssl_certificate, ssl_certificate_key, ssl_dhparam, ssl_session_cache, etc.). I'll skip explaining those directives here because they are not that difficult or important to cover in detail.

Book refs:

Clément Nedelcu, NGINX HTTP SERVER (Korean edition), Acorn Publishing, 2020.

Website refs:

nginx documentation

nginx optimization understanding sendfile tcp_nodelay and tcp_nopush

What is keep-alive? (On persistent connections)

TCP_CORK: More than you ever wanted to know

stackoverflow: Is there any significant difference between TCP_CORK and TCP_NODELAY in this use-case?

The Nagle algorithm and TCP_CORK

Copyright © 2025. moyqi. All rights reserved.